Scala configuration:

- Spark Scala Documentation

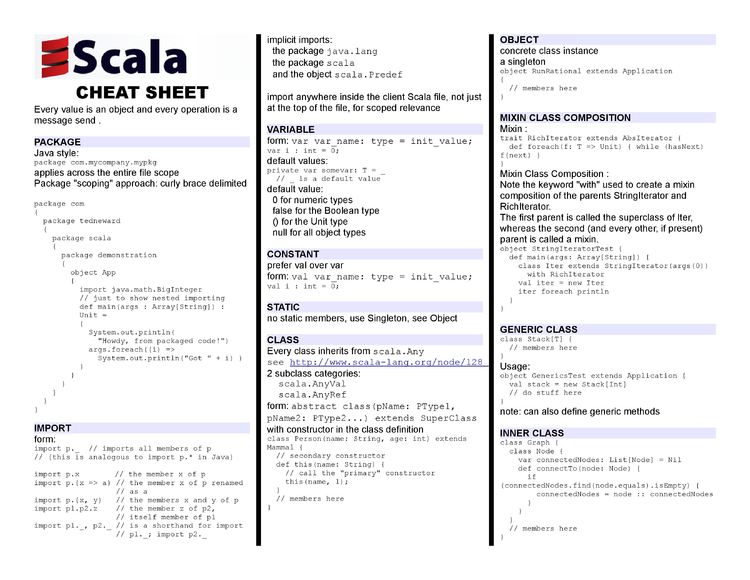

- Scala Cheatsheet

- Spark Rdd Cheat Sheet Scala

- Spark Sql Scala Cheat Sheet

- Scala Spark Sql

- Spark Scala Version

- Spark Scala Cheat Sheet Pdf

Although there are a lot of resources on using Spark with Scala, I couldn't find a halfway decent cheat sheet except for the one on DataCamp, and I thought it needed an update and should be a bit more extensive than a one-pager.

- To make sure Scala is installed:

$ scala -version

- Go to the installation destination:

$ cd downloads

- Download the Spark .tgz archive from http://spark.apache.org/downloads.html and extract it:

$ tar xvf spark-2.3.0-bin-hadoop2.7.tgz

- Edit and source the ~/.bashrc file

$ open ~/.bashrc

In the .bashrc file, write this:

$ source ~/.bashrc

- Run the Spark shell (Scala):

$ spark-shell

Scala cheat sheet (the best one I found):

https://www.tutorialspoint.com/scala/scala_basic_syntax.htm

Scala example:

- Save the Scala code in Sublime Text with the file name test_2.scala

- Compile the program:

$ scalac test_2.scala

- Run the program:

$ scala test_2.scala
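The file contents are not given above, so here is a minimal sketch of what test_2.scala could hold for trying out these two commands. The object name Test2 and the greeting helper are hypothetical choices for illustration.

```scala
// test_2.scala: hypothetical contents, used only to try out scalac and scala.
object Test2 {
  // Kept separate from main so the greeting can be checked without printing.
  def greeting(name: String): String = s"Hello, $name!"

  def main(args: Array[String]): Unit = {
    // Greet the first command-line argument, or "Scala" when none is given.
    val name = args.headOption.getOrElse("Scala")
    println(greeting(name))
  }
}
```

With the Scala 2 tools, `scala test_2.scala` compiles and runs a file like this in one step. After `scalac test_2.scala`, the generated class files are run by object name instead, e.g. `scala Test2`.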

Spark configuration:

$ conda install spark

Spark shell code sample (load a DataFrame):

scala> // spark-shell (Spark 2.x) already provides a SparkSession named spark, with built-in CSV support

scala> val df = spark.read.format("csv").option("header", "true").option("inferSchema", "true").load("taxi+_zone_lookup.csv")

scala> df.columns

scala> df.count()

scala> df.printSchema()

scala> df.show(2)

scala> df.select("Zone").show(10)

scala> df.filter(df("LocationID") <= 11).select("LocationID").show(10)

scala> df.groupBy("Zone").count().show()

scala> // registerTempTable is deprecated since Spark 2.0; createOrReplaceTempView replaces it

scala> df.createOrReplaceTempView("B_friday")

scala> spark.sql("select Zone from B_friday").show()

Machine Learning part:

import org.apache.spark.ml.feature.RFormula

Scala IDE:

Eclipse

Reference:

Problem:

“Failed to find Spark jars directory (/Users/bh/downloads/spark/assembly/target/scala-2.10/jars).

You need to build Spark with the target “package” before running this program.”

Solution:

$ ./build/sbt assembly

$ ./build/sbt package

Then the shell starts normally:

~/downloads/spark$ bin/spark-shell

(This is the Scala shell.)

Problem:

Your PYTHONPATH points to a site-packages dir for Python 2.x but you are running Python 3.x!

PYTHONPATH is currently: “/Users/bridgethuang/downloads/spark/python/lib/py4j-0.10.4-src.zip:/Users/bridgethuang/downloads/spark/python/:”

You should `unset PYTHONPATH` to fix this.

Solution:

export PYTHONPATH=$PYTHONPATH:/usr/local/lib/python3.6/site-packages

Then the shell starts normally:

~/downloads/spark$ ./bin/pyspark

(This is the PySpark shell.)

PySpark in an IPython (Jupyter) notebook:

$ pip install findspark

import findspark

findspark.init()

from pyspark import SparkContext

from pyspark import SparkConf

Problem:

bin/spark-shell: line 57: /Users/bridgethuang//bin/spark-submit: No such file or directory

Problem: with pip 10.0.1, running pip fails with "ModuleNotFoundError: No module named 'pip._internal'".

Solution: invoke pip through the interpreter, e.g. $ python3 -m pip uninstall spark

instead of calling "pip install spark" directly.

Spark 2.1.0 doesn't support Python 3.6.0, so create a Python 3.5 environment:

$ conda create -n py35 python=3.5 anaconda

$ source activate py35

Where to find .bash_profile:

~/.bash_profile

Problem: NameError: name 'execfile' is not defined (execfile was removed in Python 3).

Python 2: execfile(filename, globals, locals)

Python 3: exec(compile(open(filename, "rb").read(), filename, 'exec'), globals, locals)


RDD: Resilient Distributed Dataset

Install/upgrade Pyspark

Download new package: http://spark.apache.org/downloads.html

$ tar -xzf spark-1.2.0-bin-hadoop2.4.tgz

$ sudo mv spark-1.2.0-bin-hadoop2.4 /opt/spark-1.2.0

$ sudo ln -s /opt/spark-1.2.0 /opt/spark

$ export SPARK_HOME=/opt/spark

$ export PATH=$SPARK_HOME/bin:$PATH

Problem:

Solution:

$ unset SPARK_HOME

$ ipython notebook --profile=pyspark


Spyder Installation:


$ conda install spyder

Reference: https://gist.github.com/ololobus/4c221a0891775eaa86b0

$ tar xvf spark-2.4.0-bin-hadoop2.7.tgz

$ mv spark-2.4.0-bin-hadoop2.7 /opt/spark-2.4.0

$ ln -s /opt/spark-2.4.0 /opt/spark

$ export SPARK_HOME=/opt/spark

$ export PATH=$SPARK_HOME/bin:$PATH


# These exports can also be written in the ~/.bashrc file

To configure Spark to work with Jupyter Notebook and Anaconda:


In the .bash_profile file:

alias snotebook='$SPARK_PATH/bin/pyspark --master local[2]'

$ snotebook


Reference:


Thanks to Brendan O’Connor, this cheatsheet aims to be a quick reference of Scala syntactic constructions. Licensed by Brendan O’Connor under a CC-BY-SA 3.0 license.

| variables | |
|---|---|
| Good | Variable. |
| Bad | Constant. |
| | Explicit type. |
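A minimal Scala sketch of the variable rows above (our own illustration, not the cheatsheet's original code; the object name VariablesDemo is hypothetical):

```scala
object VariablesDemo {
  // var: a variable that can be reassigned.
  def reassigned(): Int = {
    var x = 5
    x = 6
    x
  }

  // val: a constant; writing `name = "Flink"` afterwards would not compile.
  val name = "Spark"

  // Explicit type: the Int literal 5 is widened to the declared Double.
  val ratio: Double = 5
}
```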

| functions | |
|---|---|
| Good Bad | Define function. Hidden error: without = it's a procedure returning Unit; causes havoc. Deprecated in Scala 2.13. |
| Good Bad | Define function. Syntax error: need types for every arg. |
| | Type alias. |
| vs. | Call-by-value. Call-by-name (lazy parameters). |
| | Anonymous function. |
| vs. | Anonymous function: underscore is positionally matched arg. |
| | Anonymous function: to use an arg twice, have to name it. |
| | Anonymous function: block style returns last expression. |
| | Anonymous functions: pipeline style (or parens too). |
| | Anonymous functions: to pass in multiple blocks, need outer parens. |
| | Currying, obvious syntax. |
| | Currying, obvious syntax. |
| | Currying, sugar syntax. But then: |
| | Need trailing underscore to get the partial, only for the sugar version. |
| | Generic type. |
| | Infix sugar. |
| | Varargs. |
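A sketch of several function rows above in one place: a typed definition with `=`, a type alias, call-by-value vs. call-by-name, anonymous functions, currying, a generic type, infix sugar and varargs. This is our own illustration, not the cheatsheet's code; all names (FunctionsDemo, countEvaluations, scale, and so on) are hypothetical.

```scala
object FunctionsDemo {
  // Define a function: every parameter needs a type, and `=` precedes the body.
  def square(x: Int): Int = x * x

  // Type alias.
  type IntPair = (Int, Int)
  def addPair(p: IntPair): Int = p._1 + p._2

  // Call-by-value evaluates the argument once; call-by-name (=> Int) re-evaluates it on each use.
  def twiceByValue(x: Int): Int = x + x
  def twiceByName(x: => Int): Int = x + x

  // Counts how often the argument expression is evaluated under each strategy.
  def countEvaluations(byName: Boolean): Int = {
    var evaluations = 0
    def next(): Int = { evaluations += 1; evaluations }
    if (byName) twiceByName(next()) else twiceByValue(next())
    evaluations
  }

  // Anonymous functions: named parameter vs. positional underscore.
  val incremented: List[Int] = List(1, 2, 3).map(x => x + 1)
  val doubled: List[Int] = List(1, 2, 3).map(_ * 2)

  // Block style: the last expression is the result.
  val described: List[String] = List(1, 2).map { x =>
    val y = x * 10
    s"$x -> $y"
  }

  // Currying: with an expected function type, scale(3) is eta-expanded;
  // without one, Scala 2 needs a trailing underscore: scale(3) _
  def scale(factor: Int)(x: Int): Int = factor * x
  val triple: Int => Int = scale(3)

  // Generic type parameter.
  def firstOf[T](xs: List[T]): Option[T] = xs.headOption

  // Infix sugar: 1 + 2 is the method call (1).+(2).
  val infix: Int = (1).+(2)

  // Varargs.
  def sum(xs: Int*): Int = xs.sum
}
```

The evaluation counter makes the call-by-name difference visible: `twiceByName` evaluates its argument twice, `twiceByValue` only once.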

| packages | |
|---|---|
| | Wildcard import. |
| | Selective import. |
| | Renaming import. |
| | Import all from java.util except Date. |
| At start of file: Packaging by scope: Package singleton: | Declare a package. |
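A sketch of the wildcard, selective and renaming imports listed above (our own illustration; ImportsDemo and its methods are hypothetical, and package declarations are left out of this sketch):

```scala
// Wildcard import: everything in scala.math (max, sqrt, Pi, ...).
import scala.math._
// Selective import: only these two names.
import scala.collection.mutable.{ArrayBuffer, ListBuffer}
// Renaming import: java.util.List becomes JList, so it doesn't clash with Scala's List.
import java.util.{ArrayList, List => JList}

object ImportsDemo {
  // max comes from the wildcard import of scala.math.
  def largest(a: Int, b: Int): Int = max(a, b)

  // Both mutable buffers come from the selective import.
  def buffers(): (List[Int], List[Int]) = {
    val ab = ArrayBuffer(1, 2)
    ab += 3
    val lb = ListBuffer(4, 5)
    lb += 6
    (ab.toList, lb.toList)
  }

  // The Java list type is referred to by its renamed alias.
  def javaList(): JList[String] = {
    val xs = new ArrayList[String]()
    xs.add("spark")
    xs
  }
}
```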

| data structures | |
|---|---|
| | Tuple literal (Tuple3). |
| | Destructuring bind: tuple unpacking via pattern matching. |
| Bad | Hidden error: each assigned to the entire tuple. |
| | List (immutable). |
| | Paren indexing (slides). |
| | Cons. |
| same as | Range sugar. |
| | Empty parens is singleton value of the Unit type. Equivalent to void in C and Java. |
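A sketch of the data-structure rows above, including the "hidden error" where a multi-name `val` binds every name to the whole tuple (our own illustration; DataStructuresDemo and its field names are hypothetical):

```scala
object DataStructuresDemo {
  // Tuple literal (a Tuple3).
  val triple: (Int, String, Double) = (1, "spark", 2.5)

  // Destructuring bind: unpack the tuple via pattern matching.
  val (id, name, score) = triple

  // Pitfall: this binds a, b and c each to the WHOLE tuple, not to its elements.
  val a, b, c = triple

  // Immutable List, paren indexing (zero-based), and cons (::) to prepend.
  val xs: List[Int] = List(1, 2, 3)
  val second: Int = xs(1)
  val consed: List[Int] = 0 :: xs

  // Range sugar: `1 to 5` is the same as `1.to(5)`.
  val range: List[Int] = (1 to 5).toList

  // () is the single value of type Unit.
  val unit: Unit = ()
}
```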

| control constructs | |
|---|---|
| | Conditional. |
| same as | Conditional sugar. |
| | While loop. |
| | Do-while loop. |
| | Break (slides). |
| same as | For-comprehension: filter/map. |
| same as | For-comprehension: destructuring bind. |
| same as | For-comprehension: cross product. |
| | For-comprehension: imperative-ish. sprintf style. |
| | For-comprehension: iterate including the upper bound. |
| | For-comprehension: iterate omitting the upper bound. |
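A sketch of most control-construct rows above (our own illustration; ControlDemo and its methods are hypothetical). The do-while row is skipped here because do-while was dropped in Scala 3, and break is shown via the standard library's `scala.util.control.Breaks`.

```scala
import scala.util.control.Breaks.{break, breakable}

object ControlDemo {
  // Conditional used as an expression.
  def sign(x: Int): String = if (x < 0) "negative" else "non-negative"

  // While loop: sum of 1..n.
  def sumTo(n: Int): Int = {
    var i = 1
    var total = 0
    while (i <= n) {
      total += i
      i += 1
    }
    total
  }

  // Break: stop scanning at the first value above the limit.
  def firstAbove(xs: List[Int], limit: Int): Option[Int] = {
    var found: Option[Int] = None
    breakable {
      for (x <- xs) {
        if (x > limit) {
          found = Some(x)
          break()
        }
      }
    }
    found
  }

  // Filter/map; same as xs.filter(_ % 2 == 0).map(_ * 10).
  def evensTimesTen(xs: List[Int]): List[Int] =
    for (x <- xs if x % 2 == 0) yield x * 10

  // Destructuring bind over pairs.
  def pairSums(pairs: List[(Int, Int)]): List[Int] =
    for ((a, b) <- pairs) yield a + b

  // Cross product of two lists.
  def cross(xs: List[Int], ys: List[String]): List[String] =
    for (x <- xs; y <- ys) yield s"$x$y"

  // `to` includes the upper bound, `until` omits it.
  def inclusive(n: Int): List[Int] = (for (i <- 1 to n) yield i).toList
  def exclusive(n: Int): List[Int] = (for (i <- 1 until n) yield i).toList
}
```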

| pattern matching | |
|---|---|
| Good Bad | Use case in function args for pattern matching. |
| Bad | v42 is interpreted as a name matching any Int value, and "42" is printed. |
| Good | `v42` with backticks is interpreted as the existing val v42, and "Not 42" is printed. |
| Good | UppercaseVal is treated as an existing val, rather than a new pattern variable, because it starts with an uppercase letter. Thus, the value contained within UppercaseVal is checked against 3, and "Not 42" is printed. |
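A sketch of the three `v42` cases above, returning the strings instead of printing them so they can be checked (our own illustration; PatternDemo and its method names are hypothetical):

```scala
object PatternDemo {
  val v42 = 42
  val UppercaseVal = 42

  // Bad: lowercase v42 in a pattern is a NEW variable that matches any Int.
  def lowercase(x: Option[Int]): String = x match {
    case Some(v42) => "42"
    case _         => "Not 42"
  }

  // Good: backticks refer to the existing val v42.
  def backticks(x: Option[Int]): String = x match {
    case Some(`v42`) => "42"
    case _           => "Not 42"
  }

  // Good: an uppercase name is treated as an existing val, not a new variable.
  def uppercase(x: Option[Int]): String = x match {
    case Some(UppercaseVal) => "42"
    case _                  => "Not 42"
  }

  // `case` inside a function literal destructures each argument.
  def labels(xs: List[(Int, String)]): List[String] =
    xs.map { case (n, s) => s"$s=$n" }
}
```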

| object orientation | |
|---|---|
| | Constructor params - x is only available in class body. |
| | Constructor params - automatic public member defined. |
| | Constructor is class body. Declare a public member. Declare a gettable but not settable member. Declare a private member. Alternative constructor. |
| | Anonymous class. |
| | Define an abstract class (non-createable). |
| | Define an inherited class. |
| | Inheritance and constructor params (wishlist: automatically pass-up params by default). |
| | Define a singleton (module-like). |
| | Traits. Interfaces-with-implementation. No constructor params. mixin-able. |
| | Multiple traits. |
| | Must declare method overrides. |
| | Create object. |
| Bad Good | Type error: abstract type. Instead, convention: callable factory shadowing the type. |
| | Class literal. |
| | Type check (runtime). |
| | Type cast (runtime). |
| | Ascription (compile time). |
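A sketch covering the main object-orientation rows above: an abstract class, a `val` constructor parameter, traits mixed in with `with`, an explicit `override`, a singleton used as a factory, an anonymous class, and the runtime check/cast and compile-time ascription. All type and member names (Shape, Square, Circle, Named, Describable, Shapes, OODemo) are hypothetical.

```scala
// Abstract class: cannot be instantiated directly.
abstract class Shape {
  def area: Double
}

// `val` on a constructor parameter defines a public member.
class Square(val side: Double) extends Shape {
  def area: Double = side * side
}

// Traits: interfaces with implementation, mixed in with `with`.
trait Named { def name: String }
trait Describable extends Named {
  def describe: String = s"$name shape"
}

// Overriding a concrete member (toString) must be marked `override`.
class Circle(val radius: Double) extends Shape with Describable {
  def name: String = "circle"
  def area: Double = math.Pi * radius * radius
  override def toString: String = s"Circle($radius)"
}

// Singleton object used as a factory.
object Shapes {
  def unitSquare(): Shape = new Square(1)
}

object OODemo {
  val s: Shape = new Square(2)
  val isSquare: Boolean = s.isInstanceOf[Square]  // type check (runtime)
  val side: Double = s.asInstanceOf[Square].side  // type cast (runtime)
  val cls: Class[String] = classOf[String]        // class literal
  val anon: Shape = new Shape { def area: Double = 3.0 } // anonymous class
  val ascribed: Shape = (new Square(3): Shape)    // ascription (compile time)
}
```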

| options | |
|---|---|
| | Construct a non-empty optional value. |
| | The singleton empty optional value. |
| but | Null-safe optional value factory. |
| same as | Explicit type for empty optional value. Factory for empty optional value. |
| | Pipeline style. |
| | For-comprehension syntax. |
| same as | Apply a function on the optional value. |
| same as | Same as map but function must return an optional value. |
| same as | Extract nested option. |
| same as | Apply a procedure on optional value. |
| same as | Apply function on optional value, return default if empty. |
| same as | Apply partial pattern match on optional value. |
| same as | true if not empty. |
| same as | true if empty. |
| same as | true if not empty. |
| same as | 0 if empty, otherwise 1. |
| same as | Evaluate and return alternate optional value if empty. |
| same as | Evaluate and return default value if empty. |
| same as | Return value, throw exception if empty. |
| same as | Return value, null if empty. |
| same as | Optional value satisfies predicate. |
| same as | Optional value doesn't satisfy predicate. |
| same as | Apply predicate on optional value or false if empty. |
| same as | Apply predicate on optional value or true if empty. |
| same as | Checks if value equals optional value or false if empty. |
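A sketch of the most common Option operations listed above, using only standard-library methods (`Option(...)`, `map`, `flatMap`, `flatten`, `fold`, `getOrElse`, `orElse`, `exists`, `forall`, `contains`). This is our own illustration; OptionDemo and its helpers (parsePositive, halfIfEven, parseAndHalve) are hypothetical, and parsing uses `scala.util.Try`.

```scala
import scala.util.Try

object OptionDemo {
  // Some(...) is a non-empty optional value; None is the empty one.
  val present: Option[Int] = Some(21)
  val absent: Option[Int] = None

  // Option(x) is null-safe: Option(null) is None, whereas Some(null) would hold null.
  def fromNullable(s: String): Option[String] = Option(s)

  // Pipeline style: map, then fall back to a default with getOrElse.
  def doubledOrZero(o: Option[Int]): Int = o.map(_ * 2).getOrElse(0)

  // The same map, written as a for-comprehension.
  def doubledFor(o: Option[Int]): Option[Int] = for (x <- o) yield x * 2

  // flatMap: the function itself returns an Option.
  def parsePositive(s: String): Option[Int] = Try(s.toInt).toOption.filter(_ > 0)
  def halfIfEven(n: Int): Option[Int] = if (n % 2 == 0) Some(n / 2) else None
  def parseAndHalve(s: String): Option[Int] = parsePositive(s).flatMap(halfIfEven)

  // flatten: extract a nested option.
  val nested: Option[Int] = Some(Some(3)).flatten

  // fold: apply a function, or return the default if empty.
  def describe(o: Option[Int]): String = o.fold("empty")(n => s"value $n")
}
```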